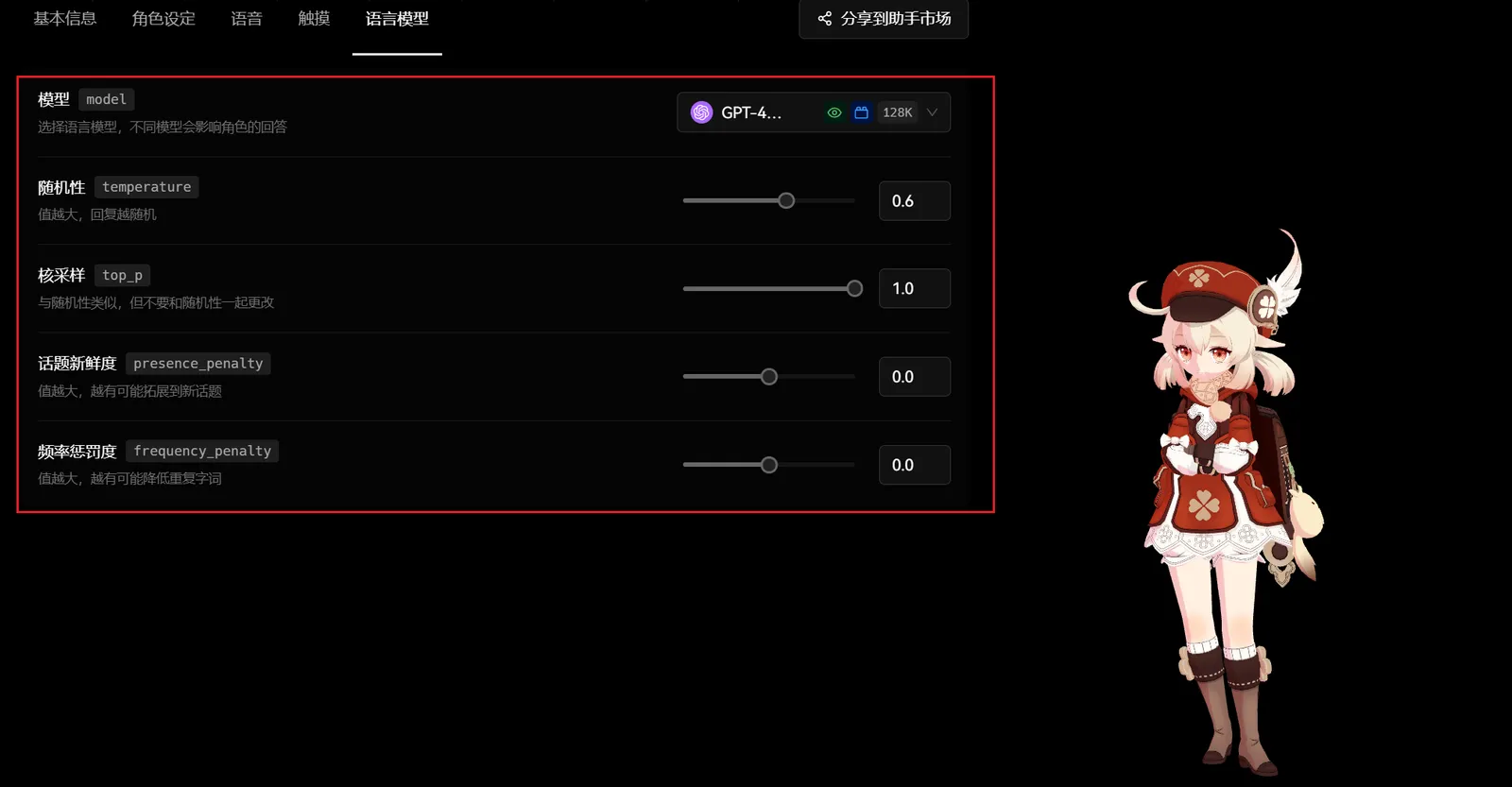

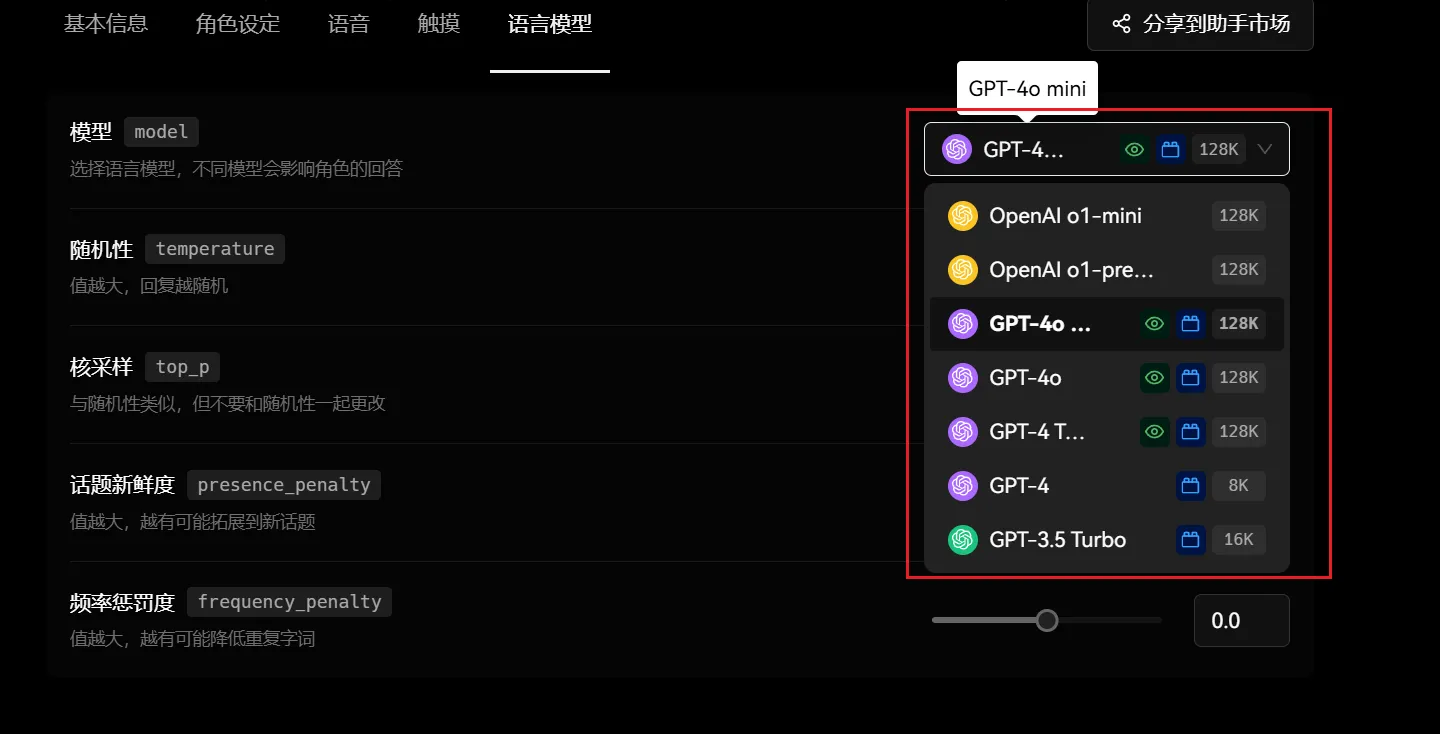

Model `model`

The model setting selects which large language model service provider to use, along with that provider’s specific model and its parameters. The choice of language model can significantly affect the character’s responses, because models differ in language understanding, generation capability, accuracy, and style. For instance, some models excel at questions in specific domains, while others are better at generating creative responses.

Currently, LobeVidol supports OpenAI API calls.
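As a rough illustration of how the settings on this page map onto an OpenAI-style chat completion request, the sketch below assembles a request body as a plain dictionary. The function name `build_chat_request` and the model name are placeholders invented for this example, and the value ranges are the ones documented for the OpenAI API. How LobeVidol itself builds its requests is not shown here.

```python
def build_chat_request(model, user_message, temperature=1.0, top_p=1.0,
                       presence_penalty=0.0):
    """Assemble an OpenAI-style chat completion request body (hypothetical helper)."""
    # Ranges below follow the OpenAI API documentation for these parameters.
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p must be between 0 and 1")
    if not -2.0 <= presence_penalty <= 2.0:
        raise ValueError("presence_penalty must be between -2 and 2")
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": temperature,
        "top_p": top_p,
        "presence_penalty": presence_penalty,
    }


# "gpt-4o-mini" is only a placeholder model name for this sketch.
request = build_chat_request("gpt-4o-mini", "Introduce yourself.", temperature=0.7)
```

Validating the ranges before sending keeps an out-of-range slider value from turning into a rejected API call.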

Randomness `temperature`

This parameter controls how random the responses are. A higher value makes the model’s answers more random, which can produce more unusual and creative responses but may reduce accuracy. A lower value makes the responses more deterministic and conservative, favoring common and reliable answers.
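To make the effect concrete, here is a minimal sketch of the usual way temperature is applied: the model’s raw scores (logits) are divided by the temperature before being turned into probabilities. The function name and the example scores are made up for illustration. This is the standard technique, not LobeVidol’s or any provider’s actual code.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities after scaling by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.0]  # hypothetical scores for three candidate tokens
cold = softmax_with_temperature(logits, 0.5)  # low temperature: sharper
hot = softmax_with_temperature(logits, 2.0)   # high temperature: flatter
```

With the low temperature, most of the probability goes to the top-scoring token. With the high temperature, the distribution flattens and less likely tokens are sampled more often.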

Nucleus Sampling `top_p`

Like randomness, this parameter affects the diversity and unpredictability of the model’s generated responses. It limits sampling to the smallest set of most likely tokens whose combined probability reaches `top_p`, so lower values narrow the choice of words. Because its effect overlaps with that of the randomness parameter, adjust one or the other rather than both; changing both at once may lead to unpredictable results.
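The following sketch shows the general idea of nucleus sampling on a toy distribution: keep the most likely tokens until their cumulative probability reaches `top_p`, then renormalize. The function name and the probabilities are illustrative assumptions, not taken from LobeVidol or a provider’s implementation.

```python
def nucleus_filter(probs, top_p):
    """Keep the smallest set of top tokens whose cumulative probability
    reaches top_p, and renormalize their probabilities to sum to 1."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept = []
    cumulative = 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


probs = [0.5, 0.3, 0.15, 0.05]  # hypothetical next-token probabilities
filtered = nucleus_filter(probs, 0.75)  # only tokens 0 and 1 survive
```

Sampling then happens only among the surviving tokens, which removes the unlikely tail instead of flattening or sharpening the whole distribution as temperature does.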

Topic Freshness `presence_penalty`

This parameter controls the model’s tendency to move on to new topics when answering questions. A higher value penalizes tokens that have already appeared in the text, which increases the likelihood that the model will introduce new topics or viewpoints and makes the responses richer and more diverse. However, if the value is set too high, the responses may stray from the core of the question.
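As a minimal sketch of the mechanism, following the adjustment described in the OpenAI API documentation, a presence penalty subtracts a flat amount from the score of every token that has already appeared at least once, regardless of how many times. The function name, token strings, and scores below are hypothetical.

```python
def apply_presence_penalty(logits, generated_tokens, presence_penalty):
    """Lower the score of each token that already appears in the output.

    The penalty is flat: a token seen once and a token seen ten times
    are penalized by the same amount.
    """
    seen = set(generated_tokens)
    adjusted = {}
    for token, score in logits.items():
        if token in seen:
            adjusted[token] = score - presence_penalty
        else:
            adjusted[token] = score
    return adjusted


logits = {"cat": 2.0, "dog": 1.5, "tea": 1.0}  # hypothetical token scores
history = ["cat", "cat", "dog"]                 # tokens generated so far
adjusted = apply_presence_penalty(logits, history, 0.8)
```

Here "cat" and "dog" each lose 0.8 while "tea" is untouched, so the unseen token becomes relatively more likely to be chosen next.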